Measurement solutions for virtual and augmented reality headsets

AR Headset

The complete AR headset consists of two Near-Eye Displays (NEDs), sensors (such as position sensors), cameras and the eye-tracking unit. The alignment of the two NEDs (stereo alignment) is important to prevent nausea while wearing the headset and to ensure the best image quality.

A complete test of the headset is usually the last step in production.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view in VIS and NIR

- Absolute brightness, uniformity and color fidelity

- Measurement of the exit pupil position and eye relief distance

- Measurement of virtual object distance

- Measurement of the alignment of the NEDs to each other: divergence, dipvergence, rotation, and distance of the eyeboxes.
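
The stereo alignment terms in the last item can be illustrated with a small calculation. The following is a minimal sketch, not the method of any particular instrument. It assumes that each NED's line of sight is available as a direction vector, in a hypothetical coordinate system with x to the right, y up and z pointing forward. The sign convention (right minus left) and the function names are assumptions made for illustration.

```python
import math

def angles_deg(direction):
    """Return (azimuth, elevation) in degrees for a direction vector (x, y, z).

    Assumed convention: x = right, y = up, z = forward.
    """
    x, y, z = direction
    azimuth = math.degrees(math.atan2(x, z))
    elevation = math.degrees(math.atan2(y, math.hypot(x, z)))
    return azimuth, elevation

def binocular_misalignment(left_dir, right_dir):
    """Horizontal (divergence) and vertical (dipvergence) angle between two lines of sight.

    Assumed sign convention: right-eye angle minus left-eye angle.
    """
    az_l, el_l = angles_deg(left_dir)
    az_r, el_r = angles_deg(right_dir)
    return {
        "divergence_deg": az_r - az_l,
        "dipvergence_deg": el_r - el_l,
    }

if __name__ == "__main__":
    left = (0.0, 0.0, 1.0)
    right = (math.tan(math.radians(0.5)), 0.0, 1.0)  # right eye rotated 0.5 degrees outward
    print(binocular_misalignment(left, right))
```

In this sketch, two perfectly parallel lines of sight give zero for both values. Any tolerance applied to the result would depend on the headset design and is not specified here.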

Near-Eye Display

The near-eye display (NED), which comprises a projector and waveguide/combiner, projects the virtual image, superimposed on the real environment, into the human eye. Frequently, two NEDs, one per eye, are combined into one headset.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view in VIS and NIR

- Absolute brightness, uniformity and color fidelity

- Measurement of the exit pupil position and eye relief distance

- Measurement of virtual object distance

Projector

The projector displays an image of the computer-generated parts of the AR view, which is projected onto the user’s eye via the waveguide/combiner. It consists of a display unit (e.g. based on LCOS, micro-LED or laser beam scanning) and a lens that projects the generated image so that it appears at a virtual distance.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view in VIS and NIR

- Absolute brightness, uniformity and color fidelity

- Measurement of the exit pupil position and eye relief distance

- Measurement of virtual object distance

- Measurement of the alignment of optical elements to each other

Eye-Tracking Camera (NIR)

AR headsets continuously measure the viewing direction and the distance between the user’s pupils to calculate an accurate virtual image.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view

- OECF (Opto-Electronic Conversion Function)

- Defective pixels

- Tilt and focus of the lens image plane relative to the sensor
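
The defective pixel check in the list above can be sketched in a few lines. This is a simplified illustration only, assuming a uniformly illuminated (flat-field) frame given as a list of rows and a hypothetical relative tolerance. Production tests typically use separate dark and bright frames and more elaborate criteria.

```python
from statistics import median

def find_defective_pixels(frame, rel_tolerance=0.2):
    """Return (row, column) positions whose value deviates strongly from the frame median.

    frame: list of rows of pixel values from a flat-field exposure (assumption).
    rel_tolerance: allowed deviation as a fraction of the median (hypothetical default).
    """
    values = [v for row in frame for v in row]
    reference = median(values)
    limit = rel_tolerance * reference
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, v in enumerate(row)
        if abs(v - reference) > limit
    ]

if __name__ == "__main__":
    flat_field = [
        [100, 101, 99],
        [100, 255, 100],  # hot pixel in the centre
        [98, 0, 100],     # dead pixel in the bottom row
    ]
    print(find_defective_pixels(flat_field))
```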

Waveguide

The waveguide/combiner overlays the real environment with the artificial image of the projector. Both reflection and transmission waveguides in many sizes and geometries can be measured.

Test parameters

- Image sharpness (MTF)

- Distortion

- Lateral chromatic aberration (chief ray angle)

- Efficiency

Each of the above is measured across the field of view, eyebox, object distance and eye relief, in reflection and transmission, in VIS and NIR.

Exterior Cameras (VIS & NIR)

AR headsets usually have several VIS and NIR cameras for observing the environment, e.g. for object or marker detection.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view

- OECF (Opto-Electronic Conversion Function), color reproduction

- Defective pixels

- Tilt and focus of the lens image plane relative to the sensor

“The VR and AR market offers great growth potential. TRIOPTICS has been driving this development from the very beginning.”

Dr. Stefan Krey | Chief Technology Officer

VR Headset

The complete headset consists of the VR projector, sensors (such as position sensors), cameras and eye-tracking unit. A complete test of the headset is usually the last step in production.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view in VIS and NIR

- Absolute brightness, uniformity, and color fidelity

- Measurement of the exit pupil position and eye relief distance

- Measurement of virtual object distance

- Measurement of the alignment of the NEDs to each other: divergence, dipvergence, rotation, and distance of the eyeboxes.

Exterior Cameras (VIS & NIR)

VR headsets usually have several VIS and NIR cameras for observing the environment, e.g. for object or marker detection.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view

- OECF (Opto-Electronic Conversion Function), color reproduction

- Defective pixels

- Tilt and focus of the lens image plane relative to the sensor

VR-Projector

The VR projector consists of a display and projection lens and displays the virtual image to the eye. Usually, a display and two projection lenses, one per eye, are combined to form a VR projector. The alignment of the two projection lenses (stereo alignment) is important to prevent nausea when wearing the headset and to ensure the best image quality.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view in VIS and NIR

- Absolute brightness, uniformity and color fidelity across the field of view

- Measurement of the exit pupil position and eye relief distance

- Measurement of virtual object distance of the projection lens

- Alignment of the two individual projectors to each other: line of sight (divergence, dipvergence)

Eye-Tracking Cameras (NIR)

AR and VR headsets continuously measure the viewing direction and the distance between the user’s pupils in order to calculate an accurate virtual image.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view

- OECF (Opto-Electronic Conversion Function)

- Defective pixels

- Tilt and focus of the lens image plane relative to the sensor

Projection lens

The projection lens projects the 2D image of the display so that it appears at a virtual object distance that can be perceived by the eye. Pancake, Fresnel or free-form lenses are often used to keep the overall height as low as possible.

Test parameters

- Image sharpness (MTF), distortion, lateral chromatic aberration (chief ray angle) across the field of view and eyebox in VIS and NIR

- Measurement of the exit pupil position and eye relief distance

- Scattered light (veiling glare)
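
Image sharpness (MTF), listed above, is commonly derived from a measured line spread function (LSF): the MTF is the magnitude of the Fourier transform of the LSF, normalized to 1 at zero spatial frequency. The following minimal sketch shows that relationship using a plain discrete Fourier transform. The function name and the sample data are assumptions for illustration, and the sketch does not describe how any specific instrument acquires or processes the LSF.

```python
import cmath

def mtf_from_lsf(lsf):
    """Return MTF values for spatial frequencies k / n cycles per sample, k = 0 .. n // 2.

    lsf: equally spaced samples of a line spread function (assumed background-corrected).
    """
    n = len(lsf)
    magnitudes = []
    for k in range(n // 2 + 1):
        # Discrete Fourier transform at frequency index k
        s = sum(v * cmath.exp(-2j * cmath.pi * k * i / n) for i, v in enumerate(lsf))
        magnitudes.append(abs(s))
    dc = magnitudes[0]
    if dc == 0:
        raise ValueError("LSF must have a non-zero sum")
    # Normalize so that the MTF equals 1 at zero frequency
    return [m / dc for m in magnitudes]

if __name__ == "__main__":
    sharp = [0, 0, 1, 0, 0, 0, 0, 0]      # ideal, one-sample-wide LSF
    blurred = [0, 1, 2, 3, 2, 1, 0, 0]    # broader LSF, contrast drops faster
    print(mtf_from_lsf(sharp))
    print(mtf_from_lsf(blurred))
```

A narrower LSF keeps the MTF close to 1 up to higher spatial frequencies, which corresponds to a sharper image.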

Eye Simulator

In the human eye, light enters through the pupil and is focused onto the retina by a lens. The amount of light hitting the retina is determined by the iris, which controls the diameter of the pupil.

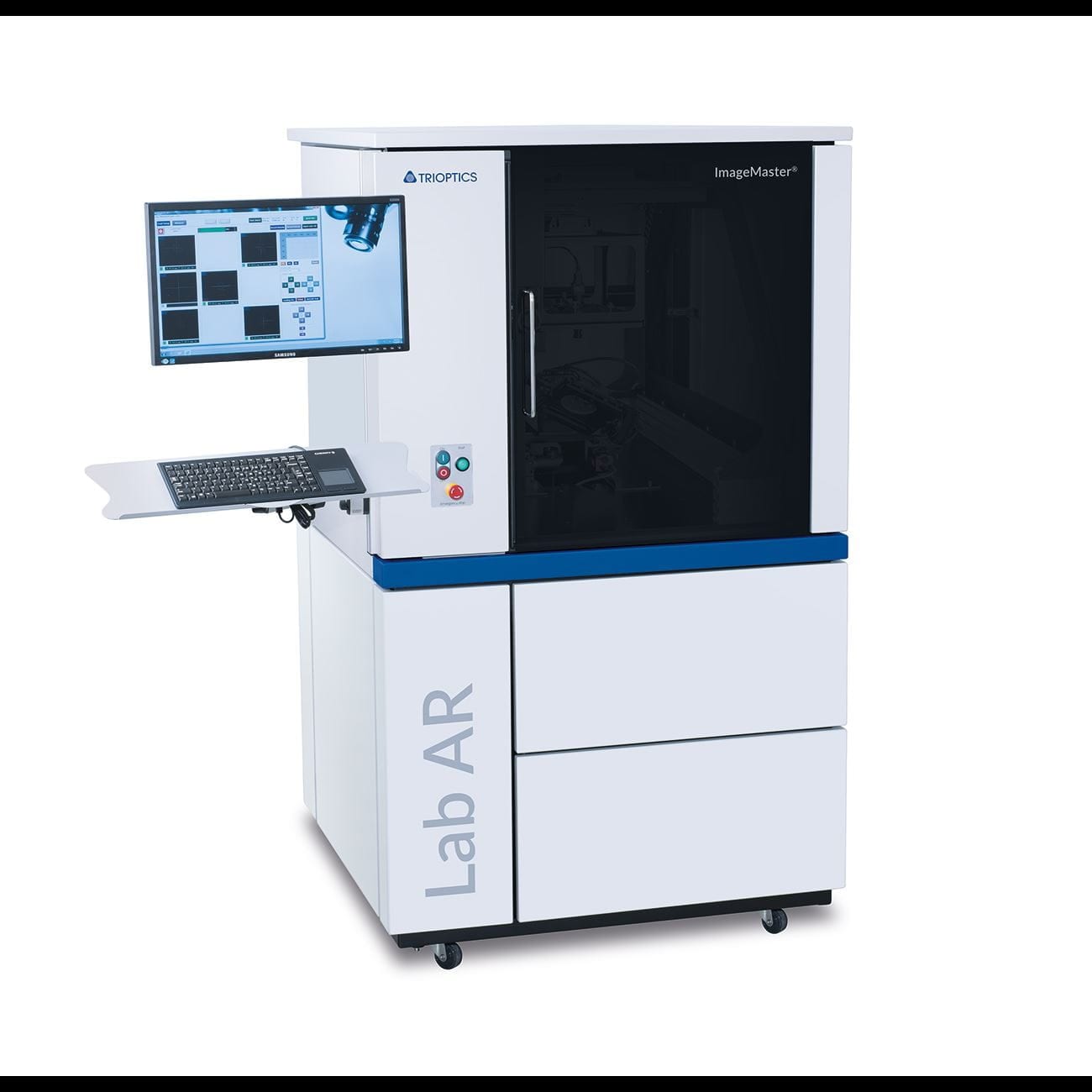

The ImageMaster® VR Series replicates this function of the biological eye. A camera with adjustable focus transmits the generated test images to an image sensor. The camera takes over the function of the human pupil, and the image sensor takes over that of the retina.